Terraform Remote State on AWS

Step-by-step guide to configuring Terraform remote state on AWS

This guide demonstrates how to configure Terraform to store its state file in an Amazon S3 bucket. By storing state remotely, teams can work collaboratively and the state is kept secure and consistent.

The source code is available on GitHub.

Prerequisites

1. You have an AWS account.

Ensure your credentials are available as environment variables. If they are not already stored in ~/.aws/config and ~/.aws/credentials, create a .secrets file in the Terraform folder, exclude it from version control (e.g. via .gitignore) and put the AWS environment variables in it (typically AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and AWS_DEFAULT_REGION).

2. Terraform installed.

Terraform should be installed. Follow the official Terraform guides or use your preferred package manager, such as brew on macOS (brew install terraform).

Terraform configuration

1. Initial terraform files

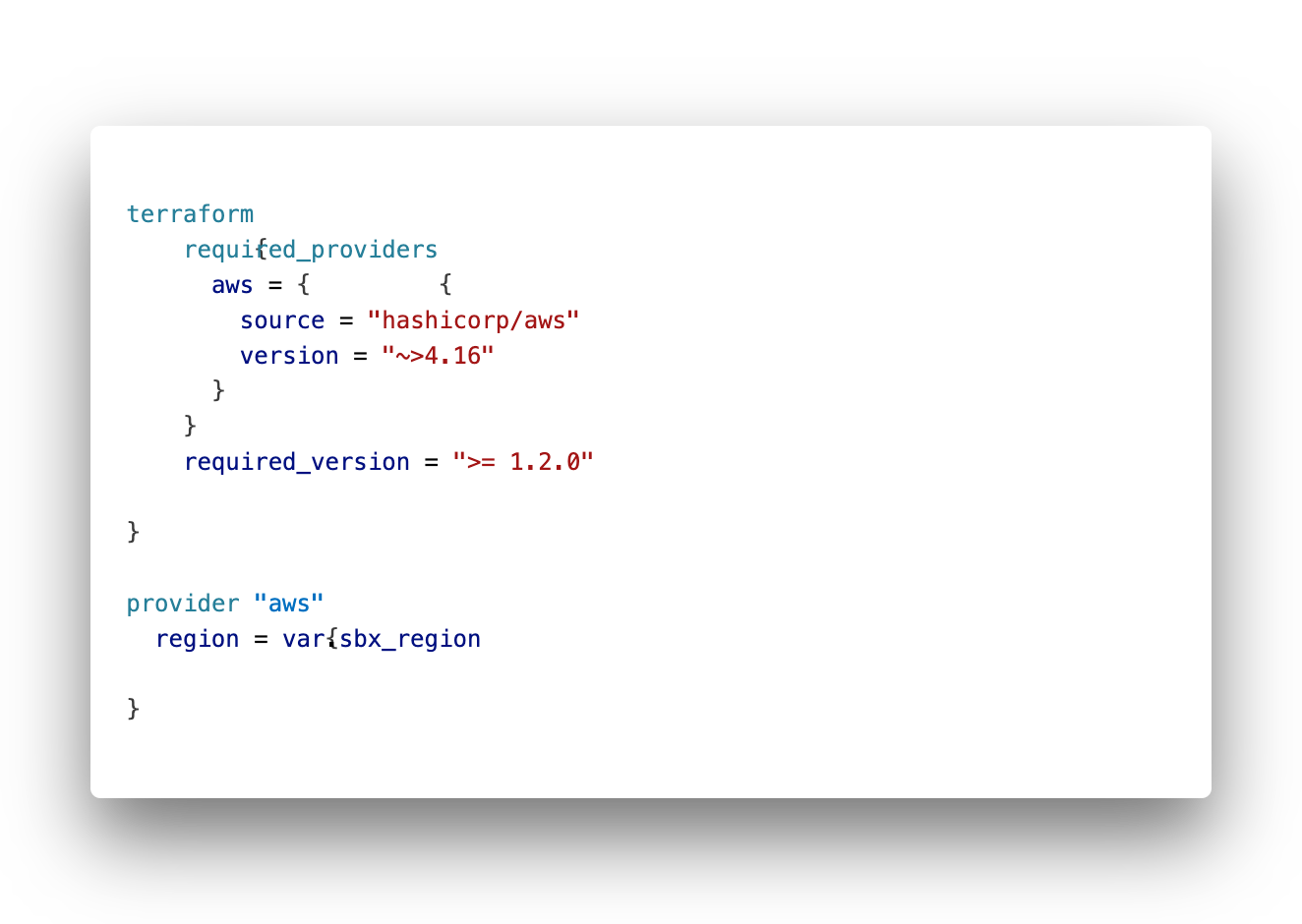

Following a standard approach, we’ll split the Terraform configuration across several files. We start with main.tf for general configuration such as provider settings (the backend configuration will go here later as well).

All variable definitions go into variables.tf, the S3 bucket is declared in storage.tf, and the variable values go into a terraform.auto.tfvars file. Last but not least, we’ll use DynamoDB as a key-value store for the state lock information; its declaration goes into dynamodb.tf. You can name these files whatever you want, as long as they end in .tf: Terraform loads every .tf file in the directory, and main.tf is only a convention.

The only exception is the file ending in .auto.tfvars: Terraform loads it automatically to set values for the variables declared in variable blocks. Terraform can also read variables from the environment (TF_VAR_<variable>) or from the command line, but for this tutorial we’ll use a terraform.auto.tfvars file.

Let’s start with main.tf:
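The original listing is not reproduced here, so the following is only a minimal sketch of what an initial main.tf could look like. The version constraints and the variable name aws_region are assumptions for illustration, not the author's values; credentials are expected to come from the environment variables described in the prerequisites.

```hcl
# main.tf (sketch): general Terraform settings and the AWS provider.
terraform {
  required_version = ">= 1.0" # assumption: any reasonably recent Terraform release

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 4.0" # assumption: v4+ for the standalone S3 configuration resources
    }
  }
}

provider "aws" {
  # Hypothetical variable; it would need to be declared in variables.tf.
  region = var.aws_region
}
```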

2. S3 Bucket Declaration

Now we declare the S3 bucket that will hold the remote state. Right from the start we should add some security and reliability to the bucket configuration: we will prevent accidental deletion, enable versioning to keep a history of state changes, enable server-side encryption, and block public access.

All of this will be configured in a file named storage.tf as follows:

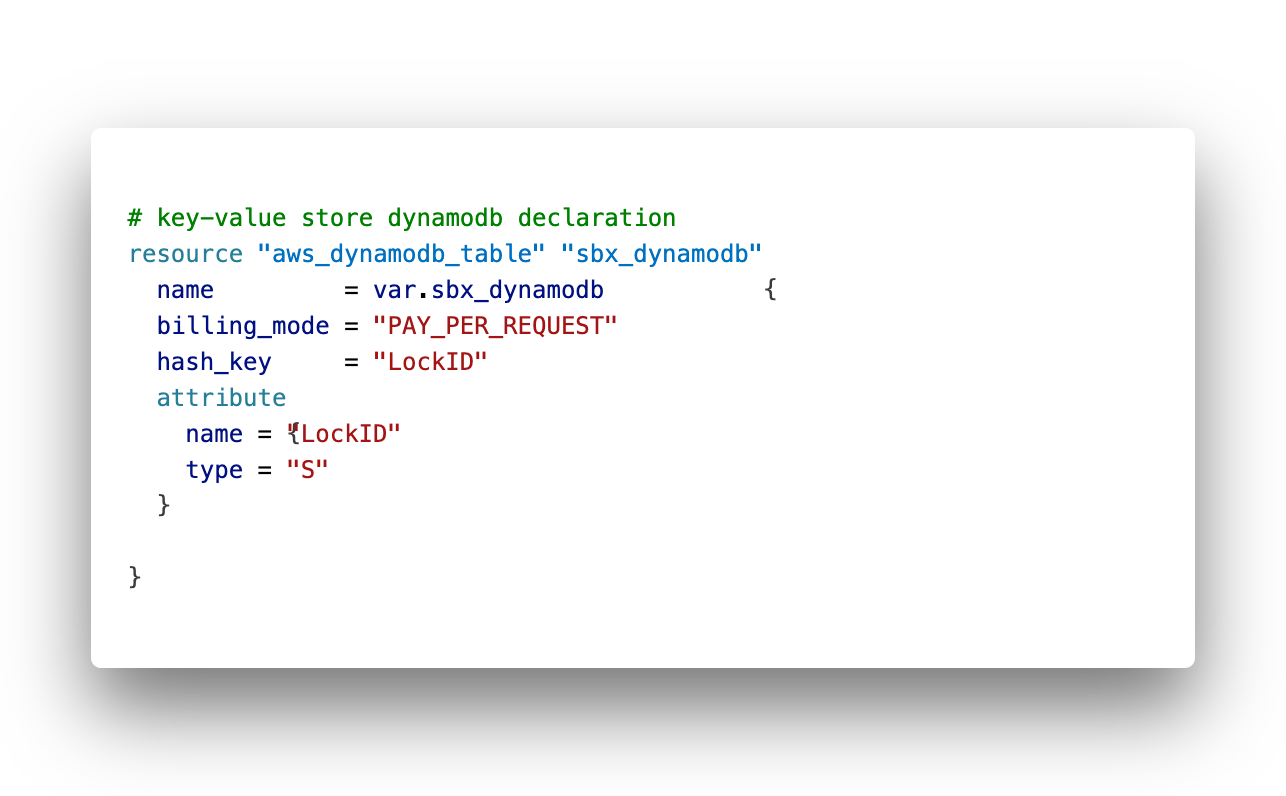
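As a rough sketch of the four measures described above, storage.tf could look something like this. It assumes AWS provider v4 or later, where versioning, encryption and the public access block are separate resources; the resource label terraform_state and the variable state_bucket_name are hypothetical names chosen for this example.

```hcl
# storage.tf (sketch): S3 bucket for the remote state.
resource "aws_s3_bucket" "terraform_state" {
  bucket = var.state_bucket_name # hypothetical variable

  # Makes Terraform refuse any plan that would destroy the bucket.
  lifecycle {
    prevent_destroy = true
  }
}

# Keep a history of every state file revision.
resource "aws_s3_bucket_versioning" "terraform_state" {
  bucket = aws_s3_bucket.terraform_state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Encrypt state files at rest (assumption: SSE-S3 with AES256).
resource "aws_s3_bucket_server_side_encryption_configuration" "terraform_state" {
  bucket = aws_s3_bucket.terraform_state.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256"
    }
  }
}

# Block every form of public access to the bucket.
resource "aws_s3_bucket_public_access_block" "terraform_state" {
  bucket                  = aws_s3_bucket.terraform_state.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```

Note that prevent_destroy only guards against deletion through Terraform; it does not stop someone from deleting the bucket in the AWS console.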

3. DynamoDB for locking

Locking the state file is strongly recommended if multiple users or CI/CD pipelines deploy to the same infrastructure in parallel via Terraform.

To ensure consistent reads and conditional writes, we will use AWS DynamoDB as a distributed key-value store for the lock.

Create a dynamodb.tf file and place the following code in it:
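A minimal sketch of such a lock table might look like the following. The S3 backend expects a partition key named LockID of type string; the resource label terraform_locks, the variable lock_table_name and the on-demand billing mode are assumptions made for this example.

```hcl
# dynamodb.tf (sketch): lock table for the Terraform state.
resource "aws_dynamodb_table" "terraform_locks" {
  name         = var.lock_table_name # hypothetical variable
  billing_mode = "PAY_PER_REQUEST"   # assumption: on-demand billing, no capacity planning
  hash_key     = "LockID"            # key name expected by the S3 backend

  attribute {
    name = "LockID"
    type = "S" # string
  }
}
```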

4. Initialization

The stage is set: everything is in place for the first initialization run.

Ensure your AWS environment variables are set and all files are saved to disk.

Run “terraform fmt” for proper formatting (optional), “terraform init” to download the providers and “terraform validate” to check for typos. Then run “terraform plan” to check whether everything can be provisioned.

When everything looks good, run “terraform apply” to create the prerequisites.

5. Configure Terraform Backend

Define the backend that uses the S3 bucket in main.tf, inside the terraform block.

Because Terraform needs this information *before* it reads any variable definitions, we can’t use variables here. Make sure you use the same values as in the terraform.auto.tfvars file.

Our main.tf should now look like this:
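The original listing is missing, so here is a hedged sketch of a main.tf with an S3 backend block. The bucket name, state key, region and table name are placeholders invented for this example and must be replaced with your own literal values.

```hcl
# main.tf (sketch): same settings as before, plus the S3 backend.
terraform {
  required_version = ">= 1.0" # assumption

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 4.0" # assumption
    }
  }

  backend "s3" {
    # Literal values only: variables are not allowed in a backend block.
    bucket         = "example-terraform-state-bucket" # placeholder bucket name
    key            = "global/terraform.tfstate"       # placeholder path of the state object
    region         = "eu-central-1"                   # placeholder region
    dynamodb_table = "example-terraform-locks"        # placeholder lock table name
    encrypt        = true
  }
}

provider "aws" {
  region = var.aws_region # hypothetical variable
}
```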

The variable definition in variables.tf:
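A sketch of what variables.tf could contain, assuming three input variables. The names aws_region, state_bucket_name and lock_table_name are hypothetical and only need to match whatever the other files reference.

```hcl
# variables.tf (sketch): input variables used by the other files.
variable "aws_region" {
  description = "AWS region for the state bucket and the lock table"
  type        = string
}

variable "state_bucket_name" {
  description = "Globally unique name of the S3 bucket holding the state"
  type        = string
}

variable "lock_table_name" {
  description = "Name of the DynamoDB table used for state locking"
  type        = string
}
```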

And the values for these variables go into terraform.auto.tfvars like this:
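As an illustration only, a terraform.auto.tfvars for three hypothetical variables (aws_region, state_bucket_name, lock_table_name) might read as follows. All values are placeholders; S3 bucket names must be globally unique, so pick your own.

```hcl
# terraform.auto.tfvars (sketch): placeholder values, loaded automatically by Terraform.
aws_region        = "eu-central-1"
state_bucket_name = "example-terraform-state-bucket"
lock_table_name   = "example-terraform-locks"
```

Whatever values you choose here are the ones to repeat as literals in the backend block.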

6. Initialize Terraform Backend

Run “terraform init” again.

This initializes the Terraform workspace and configures the backend to use the S3 bucket we defined before. You will be asked to confirm the switch from the local backend to the remote storage.

Once Terraform has finished the setup, you can look into the S3 bucket in the AWS console to verify that the state file is in place.

Final Considerations

Always ensure that your S3 bucket permissions are tight and don’t allow public access. Use IAM policies to restrict access. Make any changes via Terraform so that the setup stays in sync with the state.

Conclusion

This is only a starting point; Terraform and AWS offer many more options.

Happy infrastructure coding!